VA.gov Chatbot - Product Owner

Skills: Product Management | Conversation Design | UX Research | Collaboration

Team Role: Lead Product Owner & Manager

Team Structure: 5 developers, 1 Project Manager, 1 Product Manager, 1 QA, and 2 UX Analysts

My challenge

I started this project while working at the Department of Veterans Affairs (VA) as a U.S. Digital Service (USDS) employee; I later transitioned to a VA position. At the time, the Veteran Experience Office (VEO) was actively leading live chat and chatbot discovery sessions. VEO had already chosen Microsoft Dynamics 365 (Power Virtual Agents) as the technical solution (though I do not endorse the product).

As Product Owner, my role was to take the discovery findings, identify research gaps, and lead a team toward implementing and launching a VA.gov chatbot designed to reduce call center volume.

I encountered a few challenges:

Stakeholder education. Many key stakeholders do not understand the complexity of building a chatbot, especially for a site as large and complex as VA.gov. In response, I launched a communication and evangelization program that includes:

Weekly listening meetings, where stakeholders can be heard and we gather information that can make or break the product timeline.

Weekly demo days, where stakeholders (and really anyone) can see how the product is progressing and help us unblock issues when necessary. I have found that involving stakeholders directly eases fears and co-opts people who might otherwise block momentum because they do not understand how the product may affect their day-to-day work.

A “Chatbot Quick Facts” document that addresses common myths about chatbots and helps manage expectations.

Active participation in senior leadership meetings, where I can ease concerns and help navigate real challenges.

Technology. Microsoft Power Virtual Agents has some pros and many cons. Ideally, I would have joined before the decision was made, led a technology discovery, and aligned my team's allocation and skill sets with a customizable framework such as Rasa.

Funding. As with all government programs, the chatbot depends on continuous funding. This is perhaps the most stressful challenge, because a chatbot is a “living thing” that requires constant discovery and improvement. Each year I take part in what I call “defensive fiscal spikes,” writing documentation that justifies continued investment in the chatbot.

Content. Like most government websites, VA.gov has a content repository of biblical proportions. Much of it is maintained by siloed teams, which makes it difficult for a “self-service” bot to draw on timely, customer-friendly content.

Crisis prevention. Sadly, many Veterans experience suicidal ideation. My first concern with building an open-ended platform such as a chatbot was that the AI could harm a Veteran with an insensitive reply, or with no reply at all, when that Veteran is looking for help. To manage this risk, I led a series of crisis workshops with stakeholders and the VA's resident suicidologist. Together, we agreed to write a disclaimer recommending that Veterans not use the bot for mental health purposes.

Sample “crisis” workshop

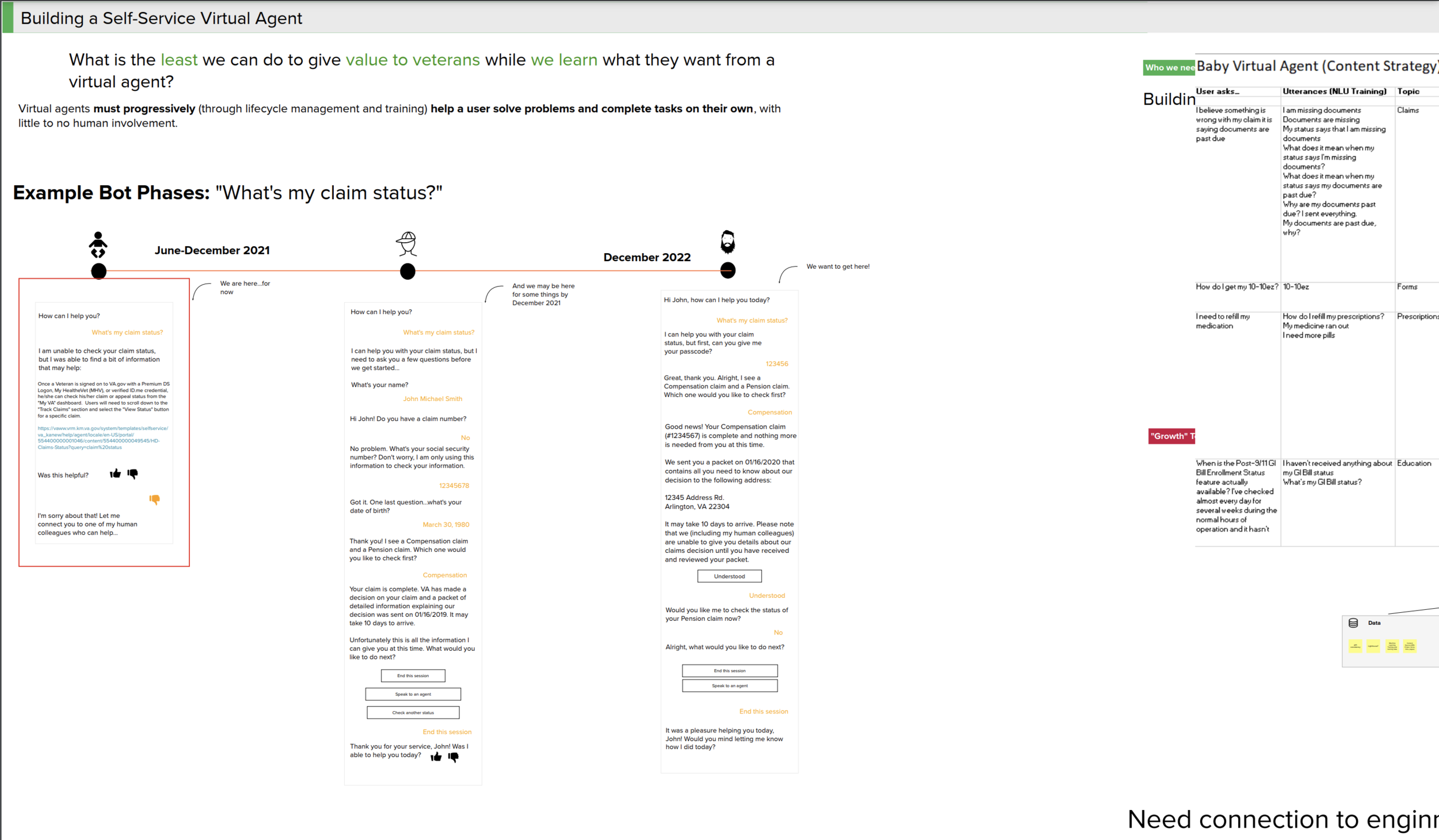

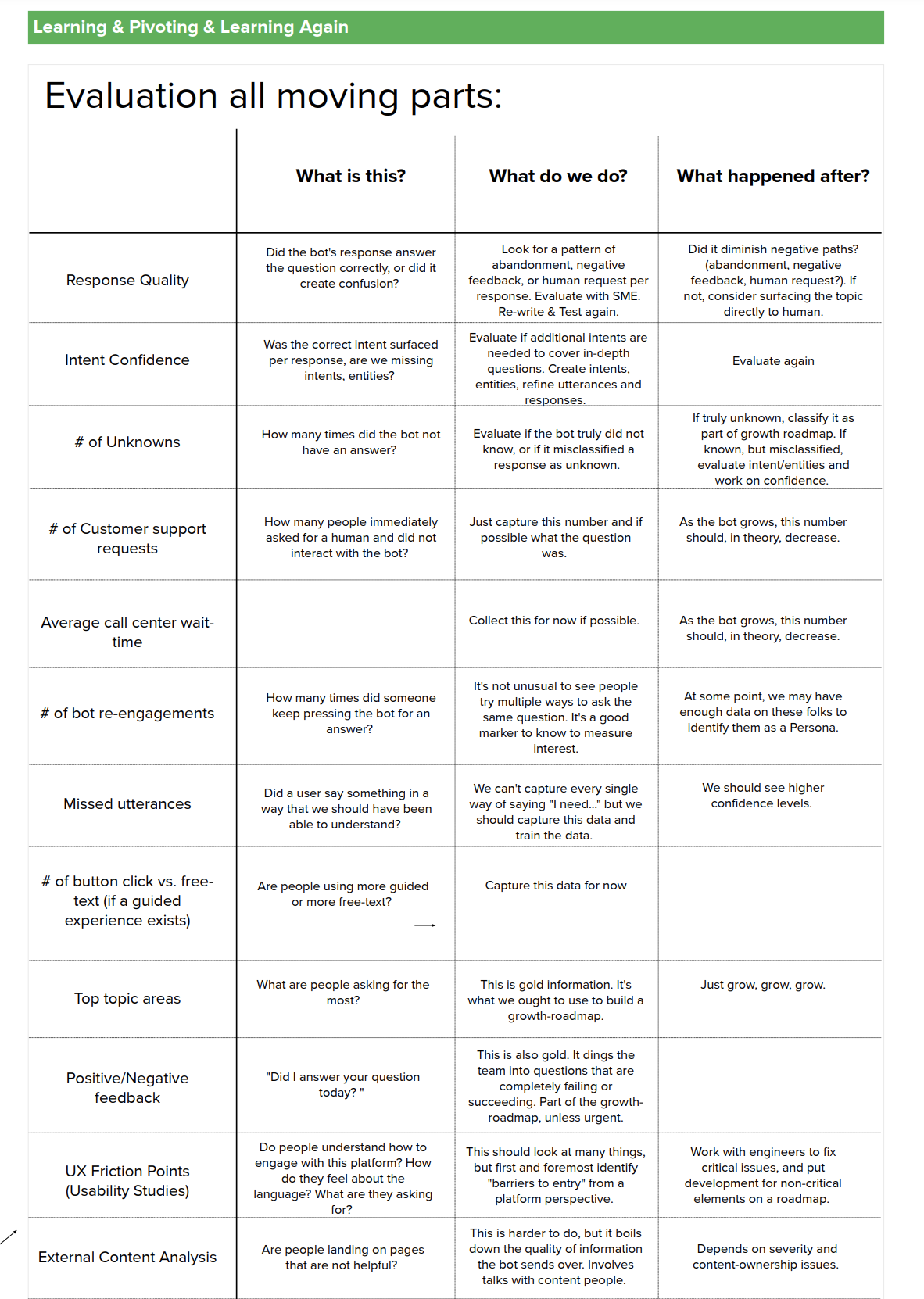

Creating a continuous learning strategy

From a stakeholder perspective, the primary goal of the bot is to reduce call center volume. However, to have any positive impact on call volume, a virtual agent must progressively (through lifecycle management, continuous discovery, and AI training) help people solve problems and complete tasks on their own, with little or no human involvement.

First, using existing VA personas, I identified who “people” meant:

Veterans and caretakers of every war era, gender, sexual orientation, gender identity, age, economic condition, and physical and technical accessibility.

Stakeholders.

Political affiliates, journalists, and others.

Because a bot must be genuinely helpful to reduce call volume, we needed to learn what this population considers a valuable chatbot. To get to the answer, we decided to build a Proof of Value, or Learning Model. I framed the problem as:

“What is the least we can do to give value to Veterans while we learn what they want from a virtual agent?”

Building the Proof of Value (POV)

Part of my role is managing stakeholder expectations. In my view, chatbots are better suited to improving the quality of calls: by handling self-service questions, they let call center agents focus on more complex ones. To kickstart this project, we assumed that a virtual agent could provide value to some Veterans (not everyone likes chatbots) by:

Increasing awareness of existing VA self-service tools.

Decreasing the time Veterans spend waiting for an outcome.

Giving Veterans 24/7 access to either anonymous or secure support.

Within a few months, we built an unauthenticated Proof of Value that included:

A greeting with suggested questions to start an open-text interaction

Approximately 100 unauthenticated bot responses covering health benefits and claims, drafted from VA.gov content

Responses for questions the bot cannot answer

The ability to surface a phone number for reaching a “human”

Recognition and responses for Veterans experiencing a crisis

Guidelines and information for those needing immediate help

POV brainstorming

Unauthenticated Proof of Value

What did we learn?

We led five major research initiatives, including investigations into voice and tone and tests with Veterans who have visual impairments. All studies had favorable results; for this portfolio, I summarize the main study, which let Veterans and caretakers try the chatbot prototype unmoderated:

Unmoderated Controlled Study with the Proof of Value in a staging environment:

Recognizing Gaps & Moving Forward

Recall that we assumed a bot could add value by:

Increasing awareness of existing VA self-service tools.

Decreasing the time Veterans spend waiting for an outcome.

Giving Veterans 24/7 access to either anonymous or secure support.

In our study, we learned that Veterans do want a bot. Many of their survey responses validated the above assumptions:

What Next? Authentication & MVP Releases

Although the unauthenticated chatbot was successful, Veterans unanimously asked for a bot that could answer personalized questions. With help from Booz Allen Hamilton, my team analyzed call center transcript data to identify low-hanging-fruit call topics that we could readily surface through API calls.

We discovered that Veterans often call the VA to check the status of their claims and appeals. My next task was to build a user flow that we could use to validate this finding with Veterans Benefits Administration (VBA) staff.

Fortunately, both claims and appeals data are accessible through VA APIs. By leveraging VA's Lighthouse APIs, the bot can retrieve compensation claim and appeal status directly.
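Once the status data comes back, the bot still has to phrase it for a Veteran. The sketch below is illustrative only and does not call the Lighthouse APIs: the `ClaimStatus` record and its fields (`claim_type`, `status`, `phase`) and the `format_claim_reply` helper are hypothetical simplifications, not the real response schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClaimStatus:
    """Hypothetical, simplified claim record (NOT the Lighthouse schema)."""
    claim_type: str
    status: str
    phase: Optional[str] = None


def format_claim_reply(claims: List[ClaimStatus]) -> str:
    """Turn claim records into a short, plain-language bot reply."""
    if not claims:
        # No data: steer the Veteran to a human rather than guessing.
        return "I couldn't find any open claims. You can call us to check further."
    lines = [f"You have {len(claims)} claim(s) on file:"]
    for claim in claims:
        detail = f" ({claim.phase})" if claim.phase else ""
        lines.append(f"- {claim.claim_type}: {claim.status}{detail}")
    return "\n".join(lines)
```

Keeping the formatting separate from the API call makes it easy to test the wording with VBA staff before wiring up live data.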

To handle authentication, we implemented ID.me sign-in at the bot level. When a Veteran asks a question that requires authentication, the unauthenticated bot hands the conversation off to the authenticated bot. This setup also preserves a Veteran's signed-in state when they have already authenticated elsewhere on VA.gov before opening the chatbot.
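The handoff described above can be sketched as a small routing decision. This is a minimal sketch under assumptions: the intent names, the `Session` object, and the `route`/`complete_sign_in` helpers are hypothetical and do not reflect the Power Virtual Agents or ID.me APIs.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical intents that require a signed-in Veteran (illustrative only).
AUTH_REQUIRED_INTENTS = {"claim_status", "appeal_status"}


@dataclass
class Session:
    """Hypothetical chat session; `signed_in` mirrors the VA.gov login state."""
    signed_in: bool = False
    pending_intent: Optional[str] = None


def route(session: Session, intent: str) -> str:
    """Decide which bot handles an intent (a sketch, not production logic)."""
    if intent not in AUTH_REQUIRED_INTENTS:
        return "unauthenticated_bot"
    if session.signed_in:
        # Already signed in on VA.gov: reuse that state, no new prompt.
        return "authenticated_bot"
    # Remember the question so the conversation can resume after sign-in.
    session.pending_intent = intent
    return "prompt_sign_in"


def complete_sign_in(session: Session) -> str:
    """Mark the session signed in and resume any pending request."""
    session.signed_in = True
    intent, session.pending_intent = session.pending_intent, None
    return route(session, intent) if intent else "unauthenticated_bot"
```

Storing the pending intent is what lets a Veteran sign in mid-conversation without having to repeat the question.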

Currently (2024 - 2025)

The past year has been a busy one! Here’s a quick rundown:

Expanded the chatbot into a VA-wide platform, allowing other departments to build conversational experiences. One partner developed, hosted, and managed an authenticated prescription skill that deflected 30 calls from call center agents in its first two weeks.

Built a robust (but still evolving) transcript analysis tool that leverages machine learning to categorize transcripts by topic. This has helped us quickly identify content gaps and opportunities for growth.
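To show the idea of bucketing transcripts by topic, here is a deliberately simple, rule-based stand-in. Our tool uses machine learning; this sketch substitutes keyword matching instead, and the topics and keyword lists are hypothetical.

```python
import re
from collections import Counter
from typing import List

# Hypothetical topic keywords; an ML model would learn these from labeled data.
TOPIC_KEYWORDS = {
    "claims": {"claim", "claims", "disability", "rating"},
    "prescriptions": {"prescription", "refill", "pharmacy", "medication"},
    "appeals": {"appeal", "appeals", "decision", "review"},
}


def categorize(transcript: str) -> str:
    """Assign the topic whose keywords appear most often, else 'uncategorized'."""
    words = re.findall(r"[a-z']+", transcript.lower())
    scores = {
        topic: sum(1 for w in words if w in keywords)
        for topic, keywords in TOPIC_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "uncategorized"


def topic_counts(transcripts: List[str]) -> Counter:
    """Count transcripts per topic; a large 'uncategorized' bucket hints at content gaps."""
    return Counter(categorize(t) for t in transcripts)
```

Whatever the classifier, the useful output is the per-topic count: topics with high volume and weak bot coverage point to where new content is needed.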

Repeatedly confirmed a key challenge: many user questions are highly personal and depend on contextual data or content we don't have. This remains a major hurdle!

Successfully linked our disability claims feature to the claims call center, allowing us to track chatbot-driven call deflection. In a 7-day scan, we saw a 90% reduction in calls, a huge win!

Usability testing confirmed that Veterans want faster escalation when the chatbot can’t provide an answer. In 2025, we’re prioritizing direct escalation to phone calls, live agent chats, and customer support ticketing.

Tested OpenAI to accelerate chatbot training by summarizing all of VA.gov's content. Unfortunately, a significant portion of 300 sample responses contained hallucinations. Worse, Veterans struggled to distinguish accurate from inaccurate information, so we put OpenAI on hold. In 2025, we plan to experiment with retrieval-augmented generation (RAG) to see whether it reduces hallucinations and improves response accuracy.
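RAG grounds the model in retrieved source passages instead of asking it to recall everything. A minimal sketch of the retrieval and prompt-building steps follows, assuming keyword overlap as a stand-in for embedding search; the passages, stopword list, and helper names are hypothetical, and the actual model call is omitted.

```python
import re
from typing import Dict, List, Set

# Tiny illustrative stopword list so common words don't drive retrieval.
STOPWORDS = {"a", "an", "the", "i", "my", "do", "how", "to", "of", "is", "what", "for", "in", "on", "can"}


def tokenize(text: str) -> Set[str]:
    """Lowercase word set minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, passages: Dict[str, str], k: int = 2) -> List[str]:
    """Return ids of up to k passages sharing the most terms with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda pid: len(q & tokenize(passages[pid])), reverse=True)
    # Drop passages with no overlap so irrelevant text never reaches the model.
    return [pid for pid in ranked[:k] if q & tokenize(passages[pid])]


def build_prompt(question: str, passages: Dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to retrieved passages."""
    ids = retrieve(question, passages, k)
    if not ids:
        return ""  # Nothing relevant: escalate instead of letting the model guess.
    context = "\n".join(f"[{pid}] {passages[pid]}" for pid in ids)
    return (
        "Answer using ONLY the sources below and cite the source id. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Returning an empty prompt when nothing matches ties RAG back to the escalation work: a question with no grounded answer goes to a human rather than to a guess.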

Excited to build on this momentum in the year ahead! 🚀